Test AI workflow

With your own dataset

Get your Maihem API key and install the SDK before you start.
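A quick way to confirm the key is in place before running anything is to read it from the environment and fail early if it is missing. The sketch below assumes the key is exported as an environment variable named `MAIHEM_API_KEY`; that name and the `read_api_key` helper are assumptions for illustration, so check the SDK setup guide for the exact variable.

```python
import os
from typing import Mapping, Optional

# Assumed variable name; confirm it against the SDK setup guide.
API_KEY_ENV_VAR = "MAIHEM_API_KEY"


def read_api_key(env: Optional[Mapping[str, str]] = None) -> str:
    """Return the API key from the environment, or raise if it is not set.

    `env` defaults to os.environ; passing a plain dict makes the helper easy to test.
    """
    env = os.environ if env is None else env
    key = env.get(API_KEY_ENV_VAR, "").strip()
    if not key:
        raise RuntimeError(f"Set {API_KEY_ENV_VAR} before running tests")
    return key
```

Calling `read_api_key()` at the top of a test script surfaces a missing key immediately instead of partway through a run.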

Integrate with your codebase

1

Add a target agent (if you haven't already)

2

Add a decorator to each step of your workflow

This is an example of a basic RAG workflow. Add a decorator to each step of the workflow as shown below.

See the full list of supported evaluators and metrics, along with the input and output maps each one requires.

Example agent workflow in Python
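A minimal sketch of the pattern, using only the standard library. The `workflow_step` decorator here is a hypothetical stand-in defined locally, not the Maihem SDK's decorator: it only records each step's inputs and output in an in-memory list, to show how a basic RAG workflow can be split into decorated steps (retrieval, generation, and the end-to-end call). The toy retriever, the sample documents, and all function names are likewise assumptions for illustration.

```python
import functools
from typing import Any, Callable, Dict, List

# Hypothetical in-memory trace; a real SDK would report steps to its own backend.
TRACE: List[Dict[str, Any]] = []


def workflow_step(name: str) -> Callable:
    """Hypothetical step decorator: records the inputs and output of each call."""
    def decorator(func: Callable) -> Callable:
        @functools.wraps(func)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            result = func(*args, **kwargs)
            # Appended after the call returns, so inner steps are logged first.
            TRACE.append({"step": name, "args": args, "kwargs": kwargs, "output": result})
            return result
        return wrapper
    return decorator


DOCUMENTS = [
    "Simulated conversations exercise a target agent.",
    "A decorator wraps a function without changing its body.",
]


def _tokens(text: str) -> set:
    """Lowercase the text, drop periods, and split on whitespace."""
    return set(text.lower().replace(".", "").split())


@workflow_step(name="retrieval")
def retrieve(query: str, top_k: int = 1) -> List[str]:
    """Toy retriever: rank documents by the number of words shared with the query."""
    query_words = _tokens(query)
    ranked = sorted(DOCUMENTS, key=lambda doc: len(query_words & _tokens(doc)), reverse=True)
    return ranked[:top_k]


@workflow_step(name="generation")
def generate(query: str, context: List[str]) -> str:
    """Toy generator: echoes the top retrieved document instead of calling a model."""
    return f"Answer to '{query}' based on: {context[0]}"


@workflow_step(name="workflow")
def answer(query: str) -> str:
    """End-to-end RAG workflow: retrieve context, then generate a response."""
    context = retrieve(query)
    return generate(query, context)
```

Calling `answer("how does a decorator work")` runs both inner steps and leaves one entry per step in `TRACE`. Keeping each step in its own decorated function is what lets every stage be inspected separately rather than only the final answer.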

Upload data and run test


Format your test data

Make sure the data you want to upload is in the following format:

3

Upload dataset

The dataset, named dataset_a, is now available to use in your tests.

4

Create test

5

Run the test

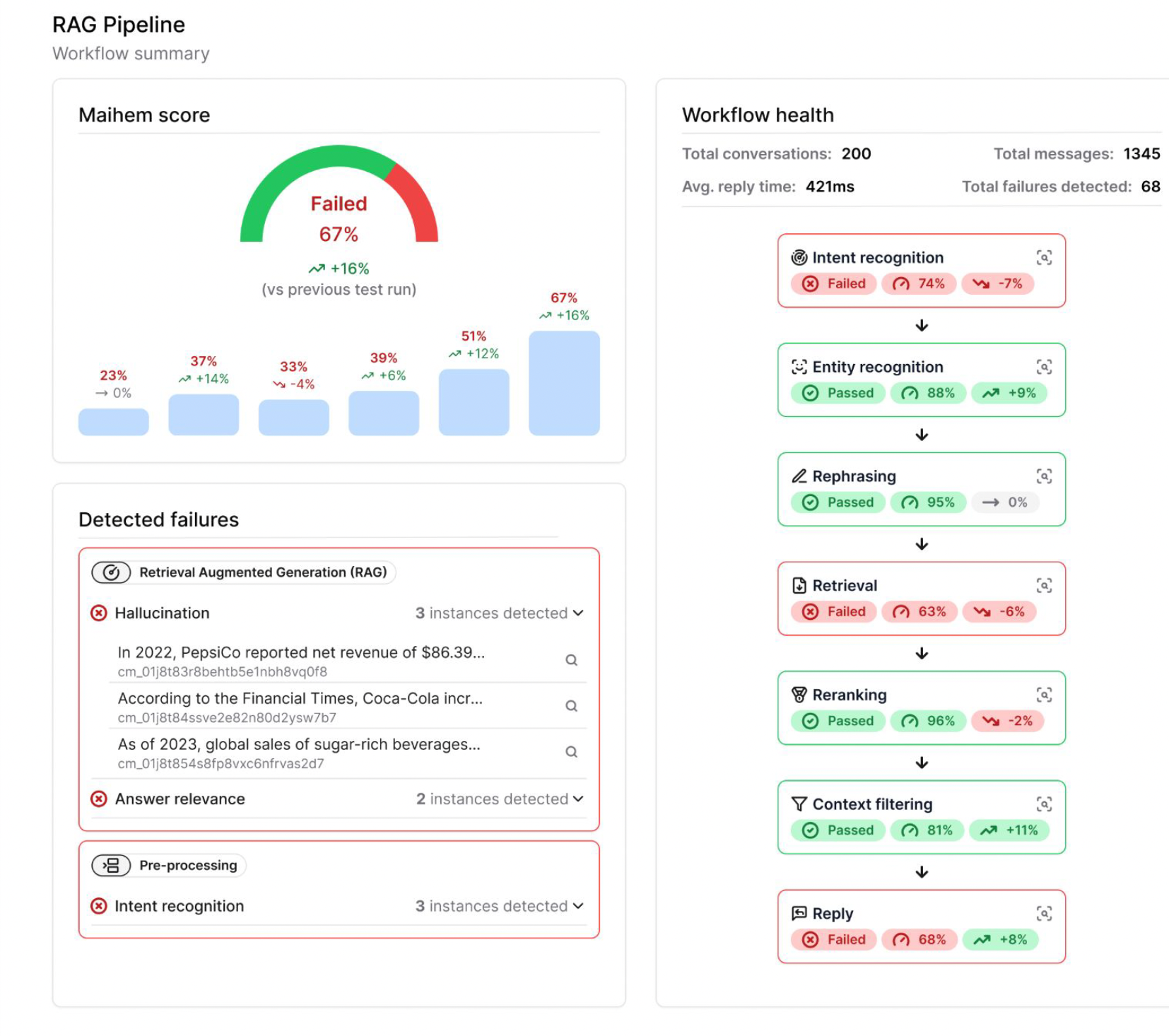

A test run will generate:

- Simulated conversations between your target agent and Maihem

- Evaluations of the conversations

- A list of detected failures

6